Log file management: Collection, storage and analysis of computer logs

Stages of log file management

In computer science, the term log or log file (literally a logbook or journal) denotes a file in which events are recorded in chronological order. It is normally used to indicate either:

- the chronological recording of the operations as they are performed

- the file or database on which these recordings are stored.

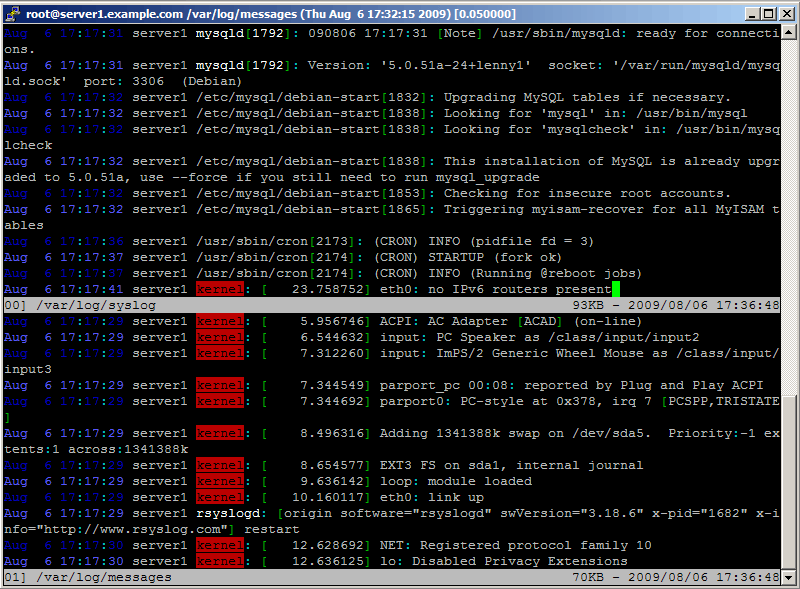

For error detection and diagnosis, logs are an indispensable support tool. Consider a process belonging to a distributed application: if it is suspended, the entire application could stall. By analyzing the logs generated by the operating system, one can trace which process caused the stall and then solve the problem.

This makes the importance of log files clear, but extracting all the useful information from them requires correct management. Management operations can be divided into three distinct stages: collection, archiving and analysis.

Collection and storage of logs

Most applications record their operations in dedicated log files as they run. The result is a considerable amount of data that would become unmanageable if kept forever. To keep the disk space occupied by the logs within reasonable limits, the logs should be copied, compressed and “emptied” at regular intervals. These operations, generally performed together, are known as rotation.
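The rotation just described can be sketched in a few lines of Python. This is only a minimal illustration, not a replacement for a dedicated rotation tool: the function name `rotate_log`, the `keep` parameter and the `.N.gz` naming scheme are assumptions made for this example.

```python
import gzip
import os
import shutil


def rotate_log(path, keep=3):
    """Copy, compress and empty the log at `path`, keeping `keep` archives."""
    # Drop the oldest archive, then shift the rest: .1.gz -> .2.gz, and so on.
    oldest = f"{path}.{keep}.gz"
    if os.path.exists(oldest):
        os.remove(oldest)
    for i in range(keep - 1, 0, -1):
        src = f"{path}.{i}.gz"
        if os.path.exists(src):
            os.replace(src, f"{path}.{i + 1}.gz")

    # Copy and compress the current log into the newest archive.
    with open(path, "rb") as current, gzip.open(f"{path}.1.gz", "wb") as archive:
        shutil.copyfileobj(current, archive)

    # "Empty" the live log by truncating it in place.
    with open(path, "w"):
        pass
```

Truncating the file in place lets the application keep writing to the same path, but any line written between the copy and the truncation is lost. A production setup would normally rely on an existing tool or on the application's own logging library instead.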

In some cases it is useful not only to copy the log contents into files on the same machine through rotation, but also to store these copies on other machines connected to the network. A few practical examples show how important this backup operation can be.

Recording the operations performed on a server is of fundamental importance, both as a source of information for resolving malfunctions and as a form of system control, to verify that the server is not undergoing attacks or intrusion attempts.

A skilled hacker will try to hide their traces as well as possible, for example by deleting the signs of their activity from the files in which the server logs everything that happens. These files therefore need the best possible protection, which means not storing them exclusively on the machine being monitored: if that machine were compromised, the logs would be at the hacker's full disposal and easy to modify.

A first possibility would be to keep a paper copy of the logs. Without physical access, the hacker could not delete their traces from the printouts. However, this choice would produce an excessive amount of paper and create practical problems for analysis and archiving.

Discarding this option, one could use a second, remote log server, so that the logs stored locally are also replicated remotely. The hacker would then have to erase their tracks in two different places. Furthermore, assuming that the remote log server is set up with all reasonable security precautions, including installing only the strictly necessary software, it would not be easy for the hacker to delete their traces from it as well.

It is also assumed that the log server is not directly connected to the internet, but is accessible only from the internal network, thus minimizing its exposure.

Another example in which the collection and storage of logs plays a fundamental role is that of distributed applications. Consider testing an application whose components run on different machines connected to the network. To analyze its operation, we would have to consult the logs on each machine individually, which slows down the analysis considerably. With an archiving policy, the application could instead be designed so that all the logs it generates are sent automatically, at regular intervals, to a log server. The analysis then becomes faster, because all the logs can be accessed simply by consulting that server.
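As a rough sketch of how a component might forward its records to such a log server, the example below uses the `SysLogHandler` from Python's standard `logging.handlers` module, which sends each record as a syslog message over UDP by default. The helper name `make_remote_logger`, the host `loghost.internal` and port 514 are assumptions for illustration; the text does not prescribe a protocol or tool. Note that this handler sends each record immediately rather than in periodic batches.

```python
import logging
import logging.handlers


def make_remote_logger(name, host, port):
    """Return a logger that forwards each record to a log server over UDP."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    # SysLogHandler with a (host, port) address uses a UDP socket by default.
    handler = logging.handlers.SysLogHandler(address=(host, port))
    # Include the component name so the server can tell the sources apart.
    handler.setFormatter(logging.Formatter("%(name)s: %(levelname)s %(message)s"))
    logger.addHandler(handler)
    return logger


# Hypothetical usage on one component of the distributed application:
# log = make_remote_logger("orders-service", "loghost.internal", 514)
# log.info("order 1234 accepted")
```

UDP delivery is not guaranteed, so a setup that must not lose records would likely need a reliable transport and a local copy as a fallback.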

Another use is keeping historical log files from testing activities, or simply from the past execution of the application or system, for example to show that an application has suffered only a certain number of failures over the last 4 years.

Log analysis

Managing logs requires an established storage policy, but once the logs are archived, the information of interest still has to be extracted from them through careful analysis.

Only through careful analysis can a network administrator, for example, check which users have accessed the network and whether any of them were unauthorized.
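A check of this kind might look like the sketch below. The log line format (`LOGIN user=... ip=...`), the function name `summarize_access` and the list of authorized users are all hypothetical; a real access log would need its own parsing rule.

```python
import re

# Hypothetical record format: "<timestamp> LOGIN user=<name> ip=<address>"
LOGIN_RE = re.compile(r"LOGIN user=(?P<user>\S+) ip=(?P<ip>\S+)")


def summarize_access(lines, authorized):
    """Return (set of users seen, list of (user, ip) logins not in `authorized`)."""
    seen = set()
    unauthorized = []
    for line in lines:
        match = LOGIN_RE.search(line)
        if match is None:
            continue  # not a login record
        user, ip = match["user"], match["ip"]
        seen.add(user)
        if user not in authorized:
            unauthorized.append((user, ip))
    return seen, unauthorized


sample = [
    "2024-01-15T10:22:01 LOGIN user=alice ip=10.0.0.5",
    "2024-01-15T10:23:40 LOGOUT user=alice",
    "2024-01-15T10:25:13 LOGIN user=mallory ip=203.0.113.9",
]
seen, unauthorized = summarize_access(sample, authorized={"alice", "bob"})
```

Here `seen` contains both `alice` and `mallory`, while `unauthorized` holds only the `mallory` login together with its source address.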

Or consider the administrator of the website of a company that sells electronic components on the internet. By analyzing the log files, the administrator can tell whether the site is really an effective tool for acquiring new contacts or clients, or merely a frill that does not help achieve the company's objectives. The analysis of log files is therefore an essential component of any search engine marketing strategy.

It is in the log files that you can see which search engines the visitors arrived from, which search keys are actually used in those engines to reach the site, which search key was used by the person who purchased a particular product, and how the actions of a visitor who arrived through one search key differ from those of a visitor who arrived through a slightly different one.
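To make this concrete, the sketch below counts search engine and search key pairs found in the referrer field of web server log lines. It assumes the common "combined" access log layout and a referrer that carries the query in a `q` parameter; the function name `search_terms` and the host `search.example` are hypothetical. Many search engines may not include the query in the referrer at all, so real logs can contain far fewer such entries.

```python
import re
from collections import Counter
from urllib.parse import parse_qs, urlsplit

# Assumed layout: ... "request" status bytes "referrer" "user-agent"
LINE_RE = re.compile(
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) \S+ '
    r'"(?P<referrer>[^"]*)" "(?P<agent>[^"]*)"'
)


def search_terms(lines, param="q"):
    """Count (search engine host, search key) pairs found in referrers."""
    counts = Counter()
    for line in lines:
        match = LINE_RE.search(line)
        if match is None:
            continue  # line does not follow the assumed layout
        referrer = urlsplit(match["referrer"])
        terms = parse_qs(referrer.query).get(param)
        if referrer.hostname and terms:
            counts[(referrer.hostname, terms[0])] += 1
    return counts


sample = [
    '203.0.113.7 - - [10/Oct/2023:13:55:36 +0000] "GET /products/42 HTTP/1.1" '
    '200 2326 "https://search.example/search?q=cheap+resistors" "Mozilla/5.0"',
    '203.0.113.8 - - [10/Oct/2023:13:56:02 +0000] "GET / HTTP/1.1" '
    '200 512 "-" "Mozilla/5.0"',
]
counts = search_terms(sample)
```

With the two sample lines, only the first contributes an entry, keyed by `("search.example", "cheap resistors")`. Linking a search key to a later purchase would additionally require grouping requests by visitor, which this sketch does not attempt.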

To simplify the management of the log files to be analyzed, the website administrator can use a range of very “marketing oriented” log analysis tools. The data obtained from the logs do not only show which engines and which keywords generate the most traffic. They also provide fundamental information for understanding the most efficient way of “modernizing” the site so that visitors find the information they are looking for, and for understanding, from the navigation paths, which phase of the purchase process a user who uses one search key rather than another is in.

As we have just seen for the web, in other areas too there are numerous applications that analyze different types of logs to obtain fundamental information about how an application behaves.